Gone are the days when translating from one language to another required a bilingual dictionary. Nowadays, when you come across words in a foreign language, you open an online translation platform and get results instantly. That, in essence, is machine translation: an automated process of rendering text in one language into another. Machine translation has become so common that Google Translate reports translating over 100 billion words a day.

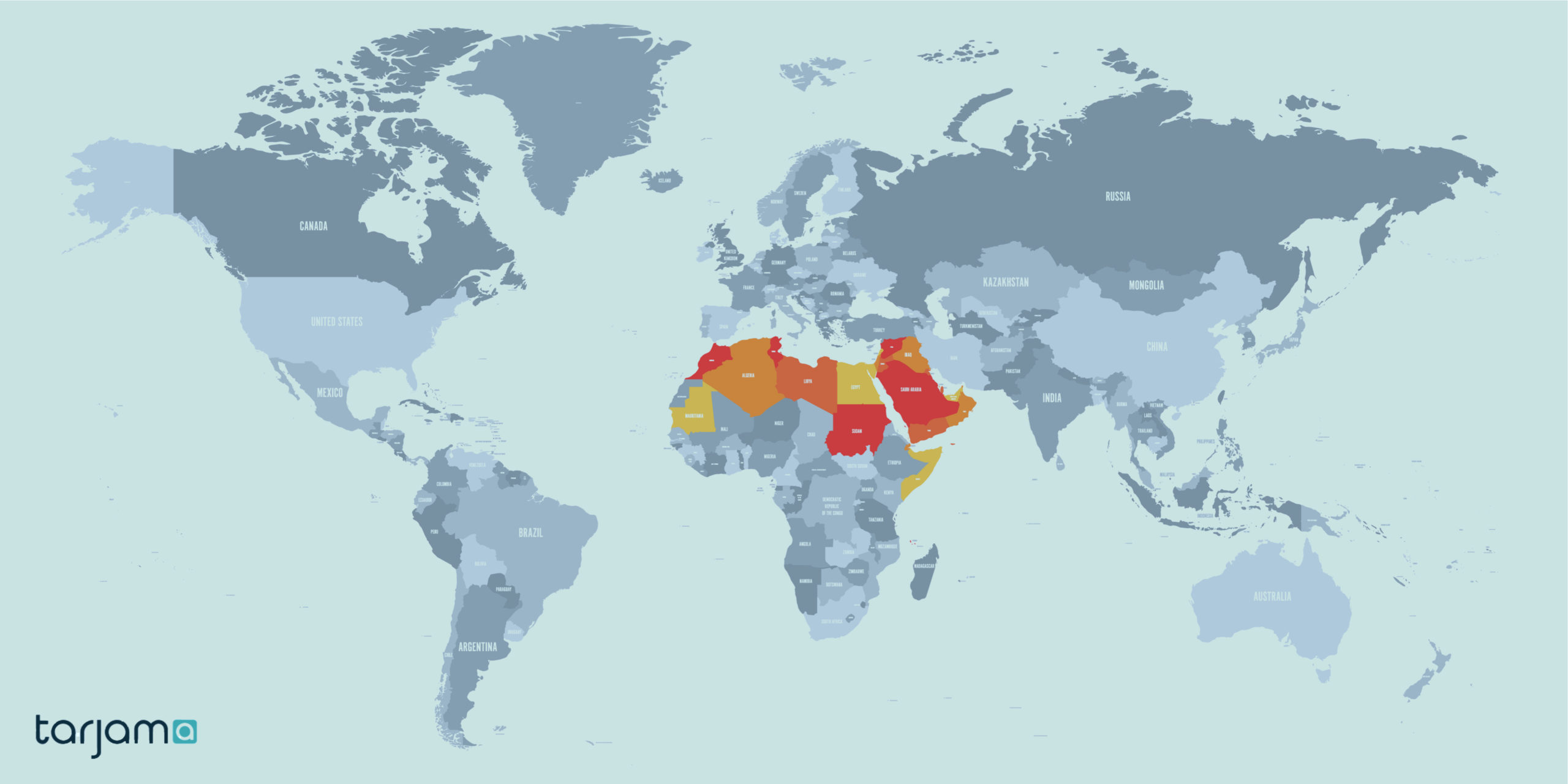

Aside from personal use, machine translation (MT) helps brands and businesses reach global audiences. Now more than ever, website content is translated into numerous languages to break down language barriers. By doing so, businesses not only expand into new international markets but also reach groups that previously had little access to information on the internet.

Machine translation has an edge over human translation in speed and cost: a computer translates almost instantly, at less than a third of the cost. Despite improvements in MT output, many professionals and businesses still believe it cannot substitute for human translation. Some companies therefore combine the two, using MT for the initial translation and then editing the result to improve its quality and accuracy. Used correctly, machine translation can speed up the translation process without compromising the quality of the final content.

When Should I Use Machine Translation?

- Where large volumes of content need to be translated. When translating whole websites or large documents, especially when time is critical, relying solely on human translators is often not feasible. Machines can run uninterrupted for long periods and deliver results almost instantly. Human translators can then edit and review the machine output to ensure that the final content is polished and accurate.

- Where nuances are unimportant. Natural language is full of ambiguity, so it is hard to pin words down to fixed meanings and styles. The language used in manuals or software documentation, however, is usually straightforward, which makes MT appropriate. Many MT systems translate text one sentence at a time, treating each sentence independently (often in parallel). The result is a sequence of separately translated sentences rather than a cohesive text, so problems with flow, fluency, and readability are common in machine-translated output. This is especially true for creative or highly specialized work, where the meaning of words depends heavily on context.

- Where the translation budget is limited. Companies are always looking for ways to reduce expenditure, which is why many small firms turn to machine translation: machines cost less than human labor. To keep costs low while preserving the integrity of the translated text, some businesses use a mix of machine and human translation.

- Where the content is ephemeral. Short-lived content such as customer reviews, emails, or FAQs is produced quickly and often used only once, so its translation does not need to match the quality of professional documents. Translation quality also matters less when the output is only for in-house research.
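The sentence-by-sentence behavior described under "Where nuances are unimportant" can be illustrated with a minimal sketch. It assumes a hypothetical `translate_sentence` function backed by a tiny word-for-word French-English lexicon (`LEXICON`) that stands in for a real MT engine; only the structure matters here. Each sentence is translated independently and in parallel, so the second sentence never "knows" that *elle* refers to *la table*.

```python
import re
from concurrent.futures import ThreadPoolExecutor

# Hypothetical word-for-word lexicon standing in for a real MT engine (illustration only).
LEXICON = {"la": "the", "table": "table", "est": "is", "rouge": "red",
           "elle": "she", "neuve": "new"}

def translate_sentence(sentence: str) -> str:
    """Translate one sentence in isolation, with no knowledge of its neighbors."""
    words = sentence.rstrip(".").lower().split()
    return " ".join(LEXICON.get(w, w) for w in words).capitalize() + "."

def translate_document(text: str) -> str:
    """Split text into sentences, translate them in parallel, and rejoin them in order."""
    sentences = [s for s in re.split(r"(?<=\.)\s+", text.strip()) if s]
    with ThreadPoolExecutor() as pool:
        # pool.map returns results in input order, even though the work runs concurrently.
        translated = list(pool.map(translate_sentence, sentences))
    return " ".join(translated)

print(translate_document("La table est rouge. Elle est neuve."))
# The table is red. She is new.
```

Because the pronoun is translated without its antecedent, the output says "She" where English needs "It": the kind of cohesion problem that human post-editors are there to catch.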

How Does Machine Translation Work?

Machine translation works by using software to convert one language (source language) to another (target language). Although it sounds straightforward, complex processes go into making even the most basic of translations possible. Different types of MT systems are in use today:

1. Rules-Based Machine Translation

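Rules-based machine translation (RBMT) was the first type of MT system to be used commercially. RBMT is based on the premise that languages are governed by grammatical, syntactic, and semantic rules. Human experts in both the source and target languages define these rules in advance, and the system relies heavily on a robust bilingual dictionary. Translation takes place in three phases: analysis (parsing the structure of the source sentence), transfer (mapping that structure and its words into the target language), and generation (producing a grammatical sentence in the target language).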

Building an RBMT system is time-consuming and expensive, but it can produce higher-quality output than other approaches. Its vocabulary can be updated or edited easily to refine the translated text. These refinements help the output read more fluently and remove the mechanical quality that machine translations often have.

RBMT works best when translating between languages whose grammatical rules are well defined and can be described explicitly.
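To make the three phases (analysis, transfer, generation) concrete, here is a minimal English-to-Spanish sketch. The tiny lexicons `SOURCE_POS` and `BILINGUAL` and the single transfer rule (English adjective-noun order becomes Spanish noun-adjective order) are hypothetical and cover only a handful of words; a real RBMT system encodes thousands of hand-written rules and a full bilingual dictionary.

```python
# Hypothetical English part-of-speech lexicon used in the analysis phase.
SOURCE_POS = {"the": "DET", "red": "ADJ", "small": "ADJ", "car": "NOUN", "house": "NOUN"}

# Hypothetical English -> Spanish dictionary: nouns map to (word, gender);
# determiners and adjectives map to their masculine/feminine forms.
BILINGUAL = {
    "the": {"m": "el", "f": "la"},
    "red": {"m": "rojo", "f": "roja"},
    "small": {"m": "pequeño", "f": "pequeña"},
    "car": ("coche", "m"),
    "house": ("casa", "f"),
}

def analyse(sentence):
    """Analysis: tokenize the source sentence and tag each word's part of speech."""
    return [(word, SOURCE_POS[word]) for word in sentence.lower().split()]

def transfer(tagged):
    """Transfer: apply a structural rule, English ADJ NOUN -> Spanish NOUN ADJ."""
    out = list(tagged)
    i = 0
    while i < len(out) - 1:
        if out[i][1] == "ADJ" and out[i + 1][1] == "NOUN":
            out[i], out[i + 1] = out[i + 1], out[i]
            i += 2
        else:
            i += 1
    return out

def generate(tagged):
    """Generation: look up target words and make articles/adjectives agree with the noun."""
    gender = next(BILINGUAL[word][1] for word, pos in tagged if pos == "NOUN")
    words = [BILINGUAL[word][0] if pos == "NOUN" else BILINGUAL[word][gender]
             for word, pos in tagged]
    return " ".join(words)

def translate(sentence):
    return generate(transfer(analyse(sentence)))

print(translate("the red car"))      # el coche rojo
print(translate("the small house"))  # la casa pequeña
```

The dictionary lookup alone is not enough: the generation phase must also choose the masculine or feminine form of the article and adjective to agree with the noun, which is exactly the kind of rule human experts write by hand.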

2. Statistical-Based Machine Translation

Statistical-based machine translation (SBT) uses statistics to generate translations, with parameters derived from analyzing existing collections of bilingual text, known as text corpora. Unlike rules-based machine translation, which works word by word, SBT typically works with whole phrases, which reduces the rigidity of word-for-word translation.

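Statistical models derived from a bilingual corpus (source and target languages) and a monolingual corpus (target language only) determine which words or phrases are most likely to be used. The bilingual corpus yields a translation model, which estimates how likely a target phrase is as a translation of a source phrase, and the monolingual corpus yields a language model, which favors fluent target-language output.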
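The interplay between the two models can be sketched in a few lines. This is a simplified illustration, assuming a hypothetical set of aligned French-English phrase pairs (`ALIGNED_PHRASES`) and a tiny English corpus (`ENGLISH_CORPUS`). Translation probabilities are estimated by relative frequency, the language model is an add-one-smoothed bigram model, and candidates are ranked by the sum of the two log probabilities; real systems add many more features, tune their weights, and use a decoder that searches over phrase segmentations of whole sentences.

```python
import math
from collections import Counter

# Hypothetical aligned phrase pairs (French -> English) extracted from a bilingual corpus.
ALIGNED_PHRASES = [
    ("maison", "house"), ("maison", "house"), ("maison", "home"),
    ("la maison", "the house"), ("la maison", "the house"), ("la maison", "the home"),
]

# Hypothetical monolingual English corpus used to train the language model.
ENGLISH_CORPUS = ["the house is blue", "the blue house", "go home", "the house"]

def phrase_table(pairs):
    """Translation model: p(target | source) estimated by relative frequency."""
    pair_counts = Counter(pairs)
    source_counts = Counter(src for src, _ in pairs)
    return {(s, t): c / source_counts[s] for (s, t), c in pair_counts.items()}

def bigram_lm(sentences):
    """Language model: add-one-smoothed bigram log-probability of an English string."""
    context_counts, bigram_counts = Counter(), Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        context_counts.update(tokens[:-1])
        bigram_counts.update(zip(tokens, tokens[1:]))
    vocab_size = len({w for s in sentences for w in s.split()} | {"</s>"})

    def logprob(text):
        tokens = ["<s>"] + text.split() + ["</s>"]
        return sum(
            math.log((bigram_counts[(a, b)] + 1) / (context_counts[a] + vocab_size))
            for a, b in zip(tokens, tokens[1:])
        )
    return logprob

def best_translation(source, tm, lm):
    """Rank candidate phrases by log p_TM(target | source) + log p_LM(target)."""
    candidates = [t for (s, t) in tm if s == source]
    return max(candidates, key=lambda t: math.log(tm[(source, t)]) + lm(t))

TM = phrase_table(ALIGNED_PHRASES)
LM = bigram_lm(ENGLISH_CORPUS)
print(best_translation("la maison", TM, LM))  # the house
```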

The large volumes of text that SBT systems require have become more readily available with the widespread use of the internet and cloud computing. Although SBT output is generally more fluent than RBMT output, it is less consistent.

3. Neural Machine Translation

Neural machine translation (NMT) is a more advanced successor to SBT. It uses a large artificial neural network to predict the most likely sequence of words, modeling long phrases and whole sentences at once. Unlike SBT, which combines several separately built components, an NMT model is trained jointly as a single system to maximize translation quality, and it typically requires less memory.

The artificial neural networks used in NMT (loosely inspired by the brain) typically follow an encoder/decoder architecture: the encoder converts the source sentence into a numerical representation, and the decoder generates the target sentence from it. During training, the network compares its output with the expected translation and automatically adjusts its parameters to reduce the difference. This means it must be trained on large volumes of human-translated text before it can work, a process that can take a few weeks.
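The compare-and-adjust training loop described above can be sketched without any deep learning library. This is deliberately not an encoder/decoder: it assumes a hypothetical three-word vocabulary and a single row of scores per source word, trained with a softmax output and the cross-entropy gradient. It shows only the core idea that the model's output is compared with the expected translation and the parameters are nudged to reduce the error; real NMT applies the same principle to millions of parameters via backpropagation.

```python
import math

# Hypothetical vocabularies and parallel training pairs (illustration only).
SOURCE = ["chat", "chien", "maison"]
TARGET = ["cat", "dog", "house"]
TRAINING_PAIRS = [("chat", "cat"), ("chien", "dog"), ("maison", "house")]

def softmax(scores):
    """Turn raw scores into probabilities (shifted by the max for numerical stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def train(pairs, epochs=50, lr=0.5):
    # One row of parameters per source word, all starting at zero (uniform output).
    weights = {s: [0.0] * len(TARGET) for s in SOURCE}
    for _ in range(epochs):
        for src, expected in pairs:
            probs = softmax(weights[src])  # the model's current output
            for j, tgt in enumerate(TARGET):
                # Cross-entropy gradient: predicted probability minus 1 for the expected word.
                error = probs[j] - (1.0 if tgt == expected else 0.0)
                weights[src][j] -= lr * error  # adjust parameters to reduce the error
    return weights

def translate(weights, word):
    """Pick the target word with the highest predicted probability."""
    probs = softmax(weights[word])
    return TARGET[probs.index(max(probs))]

weights = train(TRAINING_PAIRS)
print(translate(weights, "chien"))  # dog
```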

NMT is the most advanced method of machine translation and is built on deep learning. It can be extended to new languages and can be relied upon to produce consistent, high-quality output. Google Translate, one of the most widely used translation platforms, uses NMT.

Advantages of Neural Machine Translation

- Fewer errors than other systems. NMT-generated translations require less post-editing. In one comparison with phrase-based statistical systems, NMT output contained 50% fewer word-order errors and 17% fewer lexical errors. For this reason, NMT is often used to produce professional translations.

- Uses less data. NMT typically learns the probability of a word or phrase from existing bilingual texts. For low-resource languages, however, it can draw on what it has already learned from other languages to build a translation system, reducing the need for new training data.

- Enables direct zero-shot translation. When translating between two languages such as French and Portuguese, other translation systems often convert the text into English first and then into the target language. This extra step causes significant delays. Multilingual neural machine translation models, however, can translate directly from the source language to the target language, even for language pairs they were never explicitly trained on. This capability comes from deep learning.
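Why pivoting through English costs more than time is easy to see with a toy example. The dictionaries `FR_EN`, `EN_PT`, and `FR_PT` below are hypothetical and reduce translation to word lookup, which real NMT does not do; the point is only that the pivot route needs two passes and can discard information that English does not mark, such as the grammatical gender of French *amie* (a female friend).

```python
# Hypothetical toy dictionaries (illustration only; real NMT does not translate by lookup).
FR_EN = {"ami": "friend", "amie": "friend"}   # English drops the gender distinction
EN_PT = {"friend": "amigo"}                   # so the second hop must guess a default
FR_PT = {"ami": "amigo", "amie": "amiga"}     # a direct mapping keeps the feminine form

def pivot_translate(word):
    """Two passes: French -> English, then English -> Portuguese."""
    return EN_PT[FR_EN[word]]

def direct_translate(word):
    """One pass straight from French to Portuguese."""
    return FR_PT[word]

print(pivot_translate("amie"))   # amigo (gender lost on the way through English)
print(direct_translate("amie"))  # amiga
```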

Disadvantages of Neural Machine Translation

- Clarity is needed in the source text. As with other machine translation systems, the original text must be clear and coherent; otherwise the translation may be meaningless.

- It still requires human input. Many hours of work must go into training the program before it can work efficiently. And because context remains a challenge, human verification of the translated document is important to ensure that the text makes sense. Proofread machine translation, more commonly called post-edited machine translation, is still a long way from becoming obsolete. Until machines learn to pick up on the subtleties of language, we cannot rely on them alone to produce error-free text.

- Data privacy infringement. NMT systems learn from, and constantly improve on, the data they process. As a result, translated data is often stored for future use, which poses a risk for confidential material.

Evaluation of Machine Translation

The quality of the translated content is the most important aspect of translation. This is why linguists and programmers have been trying to create tools that rate the quality of translations ever since machine translation emerged in the 1950s.

Two approaches can be used when evaluating translations:

- Glass box evaluation: measures the quality of the translation based on the internal mechanisms of the translation system.

- Black box evaluation: measures quality based solely on the output. This is the more common paradigm among translators today.

Evaluation is based on a predetermined test set made up of sentences in the source language paired with reference translations in the target language. The system's translations of the source sentences are compared against these references, and the more closely they match, the higher the score.
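A black-box evaluation against such a test set can be sketched as a small harness. The test pairs, the stand-in system `toy_system`, and the scoring function `token_f1` (unigram-overlap F1) are all hypothetical simplifications chosen for readability; in practice the scoring function would be a standard metric such as BLEU or METEOR. Only the system's outputs are inspected, never its internals.

```python
from collections import Counter
from typing import Callable, List, Tuple

# Hypothetical test set: (source sentence, reference translation) pairs.
TEST_SET: List[Tuple[str, str]] = [
    ("le chat dort", "the cat sleeps"),
    ("la maison est bleue", "the house is blue"),
]

def token_f1(hypothesis: str, reference: str) -> float:
    """Unigram-overlap F1 between one system output and its reference."""
    hyp, ref = hypothesis.split(), reference.split()
    overlap = sum((Counter(hyp) & Counter(ref)).values())  # & keeps the minimum counts
    if overlap == 0:
        return 0.0
    precision, recall = overlap / len(hyp), overlap / len(ref)
    return 2 * precision * recall / (precision + recall)

def evaluate(translate: Callable[[str], str], test_set: List[Tuple[str, str]]) -> float:
    """Black-box evaluation: score each output against its reference and average."""
    scores = [token_f1(translate(src), ref) for src, ref in test_set]
    return sum(scores) / len(scores)

def toy_system(source: str) -> str:
    """Hypothetical stand-in for an MT system under test; it gets one word wrong."""
    outputs = {"le chat dort": "the cat sleeps", "la maison est bleue": "the house is red"}
    return outputs[source]

print(evaluate(toy_system, TEST_SET))  # 0.875
```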

Types of MT Evaluation

1. Manual Evaluation

Humans read through the translated text to check its accuracy. The two main criteria are fluency and adequacy, that is, faithfulness to the meaning of the source text.

When checking fluency, the evaluator does not consult the source text and simply reads the translation to ensure that it is free of grammatical and syntactic errors.

Then, to check adequacy, the evaluator compares the translation with the original to ensure that it has not strayed from the message of the source material.
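Manual scores are usually collected on fixed scales and then aggregated. The sketch below assumes a hypothetical set of fluency and adequacy ratings on a 1 to 5 scale from two evaluators (A and B) over two segments, plus a hypothetical threshold of 3 below which a segment is sent back for post-editing; neither the scale nor the threshold is a fixed standard.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical ratings on a 1-to-5 scale, one record per (segment, evaluator).
RATINGS = [
    {"segment": 1, "evaluator": "A", "fluency": 5, "adequacy": 4},
    {"segment": 1, "evaluator": "B", "fluency": 4, "adequacy": 4},
    {"segment": 2, "evaluator": "A", "fluency": 4, "adequacy": 2},
    {"segment": 2, "evaluator": "B", "fluency": 3, "adequacy": 3},
]
ADEQUACY_THRESHOLD = 3  # hypothetical cut-off for sending a segment back to a human editor

def overall_scores(ratings):
    """Average fluency and adequacy across all segments and evaluators."""
    return {
        "fluency": float(mean(r["fluency"] for r in ratings)),
        "adequacy": float(mean(r["adequacy"] for r in ratings)),
    }

def segments_to_revise(ratings, threshold=ADEQUACY_THRESHOLD):
    """Return the segments whose average adequacy falls below the threshold."""
    by_segment = defaultdict(list)
    for r in ratings:
        by_segment[r["segment"]].append(r["adequacy"])
    return sorted(seg for seg, scores in by_segment.items() if mean(scores) < threshold)

print(overall_scores(RATINGS))      # {'fluency': 4.0, 'adequacy': 3.25}
print(segments_to_revise(RATINGS))  # [2]
```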

2. Automatic Evaluation

Automatic evaluation scores rest on the premise that the output should be as close as possible to a human translation, so automatic evaluation relies heavily on pre-existing reference translations. Because both languages and MT systems keep changing, the evaluation should be repeated regularly to keep the scores accurate.

Common metrics include METEOR, NIST, and BLEU. They compare the machine translation with one or more human reference translations and usually run without human intervention.
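To show how such a metric compares a translation with a reference, here is a minimal sentence-level BLEU sketch: clipped n-gram precisions for n = 1 to 4, combined by a geometric mean and multiplied by a brevity penalty. It assumes a single reference and whitespace tokenization and applies no smoothing, so any n-gram order with zero matches yields a score of 0. Published BLEU scores are usually computed at corpus level with a standard tool such as sacreBLEU, so treat this as an illustration rather than a drop-in replacement.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    """Count all contiguous n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def sentence_bleu(candidate: str, reference: str, max_n: int = 4) -> float:
    """Sentence-level BLEU against a single reference, without smoothing."""
    cand, ref = candidate.split(), reference.split()
    if not cand:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_ngrams, ref_ngrams = ngrams(cand, n), ngrams(ref, n)
        # Clipped counts: an n-gram is credited at most as often as it appears in the reference.
        matches = sum(min(count, ref_ngrams[g]) for g, count in cand_ngrams.items())
        total = sum(cand_ngrams.values())
        if matches == 0:
            return 0.0  # one zero precision makes the unsmoothed geometric mean zero
        log_precisions.append(math.log(matches / total))
    # Brevity penalty: penalize candidates shorter than the reference.
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return bp * math.exp(sum(log_precisions) / max_n)

print(round(sentence_bleu("the cat is on a mat", "the cat is on the mat"), 3))  # 0.537
```

Here the candidate matches 5/6 unigrams, 3/5 bigrams, 2/4 trigrams, and 1/3 four-grams, and has the same length as the reference (no brevity penalty), so the score is the fourth root of 1/12.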

Final Thoughts

Machine translation has improved significantly over the years and is helping many firms localize their content to reach a global audience. Properly used, it can produce high-quality output with minimal human input. Although MT is still far from being usable on its own, it is valuable for large volumes of content where human translation alone may be impossible.

Looking for reliable and secure machine translation for your confidential business material? Book a demo now to see Tarjama's proprietary MT, which delivers multilingual content quickly, securely, and accurately and is geared to incorporate your industry's jargon and writing style into all your translations.
