Living organisms across the spectrum of life have their own means of communication. But not all communication is considered language. Language as we know it is uniquely human, and we have developed it so much that today there are more than 7,000 living languages.

Language allows us to think, to act upon external events, and to share complex emotions and ideas. Although it has been difficult for researchers to understand what happens inside the brain as we acquire language, research advancements from the 20th century onwards have given us more insight.

In this article, we investigate how the brain builds and decodes language, and how speaking more than one language may shape our realities.

Where is language in the brain?

For language to occur, we must produce sounds or body gestures that communicate meaning. But how does the brain do that?

It has long been suggested that the left hemisphere is dominant for language. This view is tied to the broader idea that logical and analytical processing typically takes place in the left half of the brain, while the right half handles emotional and social processing. However, further studies showed that language engages both hemispheres.

Much of the early evidence came from scientists studying neurological impairments related to language. In patients recovering from strokes, they identified two forms of aphasia, an impairment in the ability to understand or produce language: Broca’s aphasia and Wernicke’s aphasia. Patients with Broca’s aphasia had difficulty producing speech and finding the right words, whereas patients with Wernicke’s aphasia struggled to understand language but could still produce fluent, if often meaningless, speech.

By studying the aphasias of stroke patients, neurolinguistic research has uncovered how regions in the left hemisphere support language comprehension and speech.

Broca’s area, in the left frontal lobe, is important for speech production, for handling the finer points of grammar and sentence structure, and for coordinating the physical movements of speaking. Wernicke’s area, located in the left temporal lobe, has been called the “hyperactive librarian of language”. It holds our mental database of words, quickly processing what we hear and assigning meaning to it. This information is passed to Broca’s area to be organized into grammatical structures and spoken aloud. The two areas therefore depend on each other to keep our speech flowing.

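Another approach to determining where language function lies in the brain is the Wada test, which is typically used with epilepsy patients before brain surgery. During the test, one hemisphere is briefly anesthetized at a time, revealing which side the patient relies on for language.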

How does the brain learn languages?

In the late 1800s, researchers began to advance what is now known as modularity: the idea that different parts of the brain have different functions, and that these functions are somewhat interdependent. Much of what was learned about language at the time came from studying people who had lost their language abilities.

Despite its neurological complexity, learning a language, or several, is one of the most naturally acquired skills for infants. Fittingly, the word infant comes from the Latin infans, meaning “unable to speak”. Infants acquire language through immersion, and it develops over several phases:

- The receptive language phase: Infants begin to understand what is being said to them.

- The productive language phase: Infants start making sounds, such as babbling, which eventually form proper words. Although babbling does not carry meaning in itself, it is a key stage in language development because it lets infants practice making sounds with their mouths, often imitating adults who are speaking. At this stage, children start learning their language’s phonemes, the distinct units of sound it uses. Studies have shown that deaf babies exposed to sign language also babble, but with their hands.

- The telegraphic speech phase: Infants begin to use mainly nouns and verbs, without full grammatical structure. For example, rather than saying “I would like a banana”, a child might say “want banana”.

Throughout the 20th century, scholars debated how humans acquire language. The famed behavioral psychologist B.F. Skinner posited that language is acquired through “associative principles” and “operant conditioning”. In this view, language is learned through reinforcement and correction.

Noam Chomsky, on the other hand, favored the notion of “innate language learning”, which hypothesizes that we are all born with hardwired structures in our brains that form the basis of our ability to learn language. He referred to this as the language acquisition device (LAD). According to this view, the LAD allows us, at a very young age, to categorize the words we hear as nouns, verbs, or adjectives.

Different languages shaping different realities

Charlemagne is said to have remarked: “…to have another language is to possess a second soul.”

While we cannot confirm or deny how much the soul is involved in this discussion, language is a core component of culture. The language attached to a particular culture will likely influence the way individuals view the world around them.

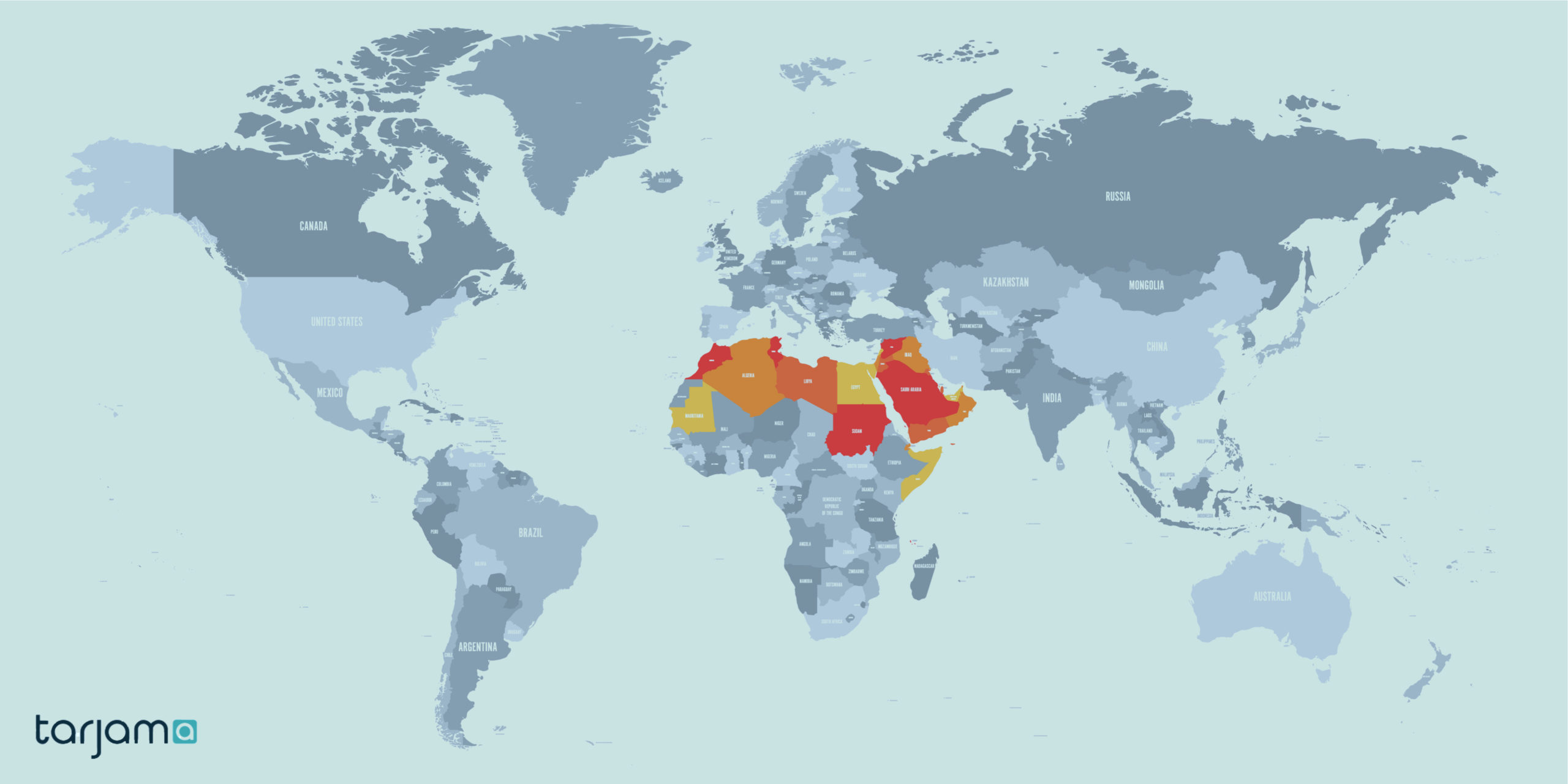

With approximately 7,000 known languages in the world, the differences between them range from grammatical structures and rules to words for situations or feelings that other languages lack. How we understand thoughts expressed in different languages is an interesting area of study, as mental imagery may play a part.

The interplay between Wernicke’s and Broca’s areas helps create the mental images we attach to spoken or written words, helping the recipient understand them. However, different languages may shape this process in different ways. Two influences on how we visualize language are the format in which it is presented, such as its writing direction, and its grammatical gender.

For example, Arabic and Hebrew are read from right to left. Linguistic researchers have found that people who read and write these languages may picture the passage of time differently from those who read and write from left to right. Some languages also assign a masculine or feminine gender to nouns. This is particularly noticeable in languages such as Spanish and German. In Spanish, the moon (la luna) takes the feminine article, whereas in German, the moon (der Mond) takes the masculine article. When both groups of speakers were asked to describe the moon, scholars found that Spanish speakers tended to use more delicate, feminine adjectives, whereas German speakers described it in more stereotypically masculine ways.

How we view events can also be shaped by the language we are speaking. For example, English tends to describe events by naming who did what, even when the action was accidental. If someone accidentally knocked a cup off a desk, an English speaker would likely recount it as: “He knocked the cup off the desk.” In some Romance languages, however, speakers would be more inclined to say: “The cup fell off the desk.” Because the incident was an accident, the human element is removed. In this way, the language being used guides how the event is framed and reasoned about. This could complicate eyewitness testimony when speakers of different native languages recall the same incident.

The effects of multilingualism on the brain

Before the 1960s, bilingualism was often considered a disadvantage, in the belief that it might prevent a person from thinking as clearly as a monolingual.

The differences between multilingual and monolingual brains are still being widely researched. What is clear is that speaking more than one language affects both how the brain works and how it looks, compared with the brains of people who speak only one language.

Being bilingual may also mean that different parts of the brain are used differently for each language. Language use involves two active skills (speaking and writing) and two passive skills (listening and reading). A balanced bilingual has equal ability in at least two languages, but most bilinguals use their two languages in different ways, depending on how they learned them.

Among the suggested benefits of multilingualism, multilingual brains are thought to be better wired, drawing on both the left and right hemispheres to decipher language. This continuous mental exercise is also thought to help delay the onset of Alzheimer’s disease and dementia.

The benefits of building bilingual vocabulary at a younger age

The critical period hypothesis asserts that young children learn languages more readily than adults because the plasticity of their developing brains allows them to use both hemispheres.

It is a myth that older people cannot begin learning new languages. What does change is that the mature brain has settled into particular ways of learning, which can make absorbing a new language’s rules feel more rigid. This can be seen in the different ways that different types of bilinguals learn.

“Compound bilingual” – Infants who acquire two languages at once.

“Coordinate bilingual” – Older children, teenagers, or young adults who develop bilingualism by managing two separate sets of concepts, for example speaking one language during the day and a different one at home.

“Subordinate bilingual” – Bilinguals (often older) who learn a second language through their native tongue. They can still become bilingual, but they do so through the one linguistic code they have spent their lives developing.

Everyone can become bilingual, but the path to mastering more than one language may differ. At Tarjama, we combine language AI with top professional talent to translate content in 55+ language pairs. Get an instant quote for our tech-powered translation services.